into hanchine-月博登录中心入口

point cloud filtering is a common function for point cloud data processing.

the images captured by the camera are limited by shooting conditions, lighting, surface reflections of objects, and angles of fill light, etc., which will cause the image quality of the camera to decrease, which will result in some noise during point cloud reconstruction. including flying points, dispersive points, and even points that appear out of thin air, so if you need to get a high-quality point cloud, you can choose to filter the point cloud data once to improve the data quality.

of course, there are many ways to improve quality, such as adjusting the lighting from the environment, adjusting the shooting angle, hdr, parameter adjustment, or algorithm adjustment from the shooting. this article will first start the discussion based on point cloud filtering.

this article describes the filtering method based on neighborhood filtering. as the name implies, it will calculate the number of valid points in each point and the surrounding neighborhood, and determine whether the current point needs to be filtered according to the degree of correlation.

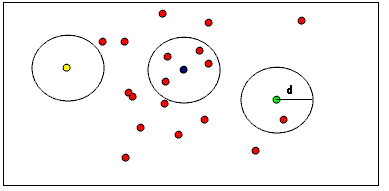

after planarizing the point cloud in 2d, as shown in the figure above:

(assuming that red is the neighborhood point, and the radius d is the neighborhood distance threshold)

the neighborhood related situations of each individual point cloud are generally divided into three types:

yellow point: there is no related point in the neighborhood, it is directly excluded as noise

blue point: there are a large number of related points in the neighborhood, which are reserved as valid points

green dots: there are related dots in the neighborhood, but the number is insufficient, and they are also excluded as noise

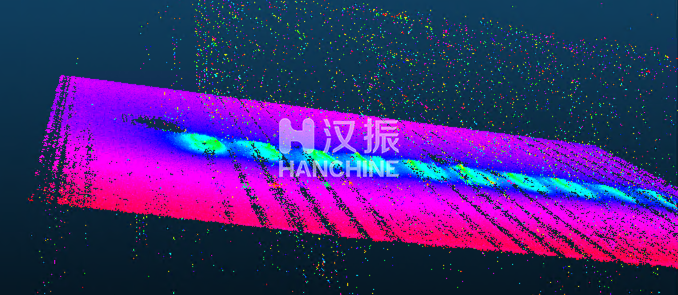

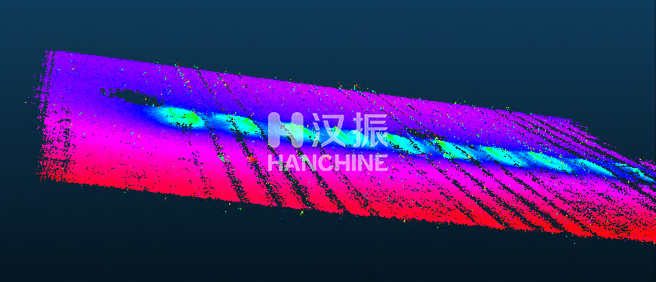

according to the principle of neighborhood filtering, most noise points can be resolved, and the effects are as follows:

before filtering

after filtering

in order to achieve filtering, the distance threshold between point clouds needs to be prepared first, that is, the radius d in the schematic diagram. generally speaking, the average distance between point cloud points*6 can be used. the larger the parameter, the worse the filtering effect. the smaller the parameter, the better the filtering effect. however, if it is too small, effective points may be filtered out.

secondly, it is necessary to prepare a threshold for the number of correlations in the neighborhood, that is, there must be at least a few point clouds in the neighborhood to retain the current point. the algorithm is implemented to search for a total of 44 points in the neighborhood, and if there are more than 6 points, it is judged to be reserved. the larger the parameter, the better the filtering effect, the smaller the parameter, the worse the filtering effect.

through the combination and tuning of the two parameters, it can already meet the point cloud filtering requirements in most scenarios.

the general process is as follows:

-

traverse each point in the point cloud data

-

according to the distance threshold, check the number of related points in the neighborhood of each point

-

determine whether the point cloud data is retained according to the relevant number threshold

-

according to the retention result, copy the point cloud data to the new data buffer

the implementation code is as follows:

in hanchine 3d development sdk, you can directly call the point cloud filtering interface, and the usage method is as follows:

the parameters are explained as follows:

-

buffer: store basic data of point cloud, need to store xyz data in 3-channel float mode

-

targetdata: filtered result data, store xyz data in 3-channel float mode

-

1920: number of point cloud columns

-

1200: number of point cloud rows

-

0.5: average distance of centroid, which can also be understood as the average distance between point cloud points

-

30: threshold of distance between clouds

with ideas, the effect is not enough, the code needs to run fast to really come in handy. for the point cloud filtering algorithm, there are the following acceleration ideas:

01 multi-threaded parallel

that is, the coreutilsconcurrent part of the code. in this algorithm, the point cloud is divided into rows and distributed to 4 threads for separate processing. the speed can be increased by 2 to 3 times under 4 threads. one thing to note here is that in current cpu-based operations, more threads are not the better, and need to be adjusted according to the actual algorithm. for this algorithm, 4 threads are appropriate, even if the cpu physically has 8 threads or even 16 threads. the main reason is that the algorithm is relatively simple, and the memory bottleneck is more likely to occur than the cpu computing bottleneck. too many threads can easily cause resource contention, reduce cache hit rate, and cause performance degradation.

02 neighborhood correlation calculation pruning

that is, the part with the largest amount of code in the code loop. the neighborhood search logic in the algorithm is to start from the current row of the point cloud, and search -1 row, 1 row, -2 row, 2 row, -3 row, 3 row in turn. combined with the actual point cloud noise distribution in the physical world, if no valid points in the neighborhood are found in the current line and -1 line, then there is a high probability that the valid points in the neighborhood cannot be searched in the 1 and -2 lines. , so the current point is basically an invalid point and can be deleted directly. according to this logic analogy, set the exit conditions of 1, 3, and 5 neighborhood valid points respectively. after this pruning, the speed can be increased by 2 to 4 times.

03 memory access sequence optimization

because the cpu has the problem of cache seconds, we hope that the developed program can increase the cache hit rate as much as possible to increase the running speed. to access point cloud data, try to access from left to right and top to bottom in memory.

04 code reduction judgment condition

part of it can be expanded inline, and the judgment of the completion of the code structure can be realized directly by writing code instead of using for and function calls.

05 reduce object copy

try to save hotspot variables through global variables. for example, point cloud data pointers, height and width and other parameters are frequently used. they can be saved in global variables so that subsequent processes can be directly accessed, instead of being saved to intermediate variables and repeatedly copied or used by pointers repeatedly de-pointing.

after optimizing the above points, the 230w point cloud filter can be controlled within 5 to 15ms.